DLP® Technology Powers Unique Volumetric Mixed Reality Display Created at UNC Chapel Hill

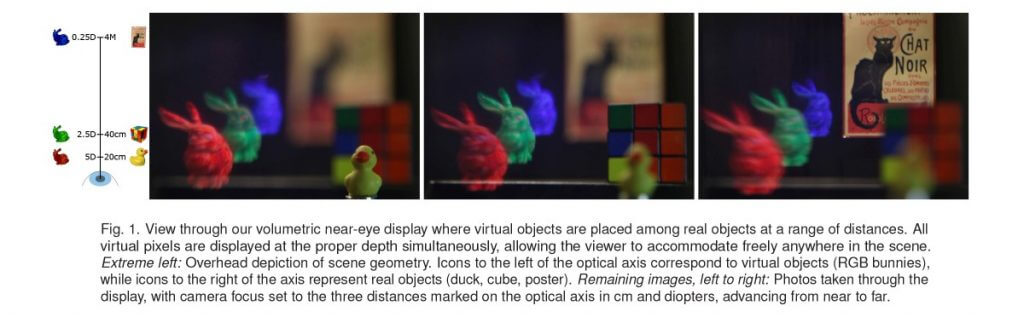

Joel Coffman | 2023-10-26 14:50 UTC

All virtual objects are displayed with correct focus cues simultaneously at all distances, just like in the real world.

“The DMD projector is synchronized with the focus-tunable lens to display a stack of binary frames in each lens cycle, and the HDR illuminator illuminates the DMD chip with a distinct selected RGB color for each binary frame.”

If you’re a fan of augmented and mixed reality, you’re familiar with the term ‘near-eye display,’ or NED. Immersive NEDs, like virtual reality glasses, take wearers into a completely virtual world, while see-through NEDs function as ‘smart glasses’ that let wearers view virtual images while interacting with the real world. The augmented reality (AR), virtual reality (VR) and mixed reality (MR) space may seem a little like science fiction, but applications for see-through NEDs could fundamentally change the way we work, learn and communicate. As the technology progresses, many developers are choosing Digital Light Processing (DLP®) technology, and the high-speed switching capabilities of its digital micromirror device (DMD), to improve the NED experience.

A research team at the University of North Carolina (UNC) at Chapel Hill – the UNC Graphics & Virtual Reality Group, led by Dr. Henry Fuchs alongside grad students Alex Blate, Kishore Rathinavel and Hanpeng Wang – takes mixed reality a step further, developing a new class of displays they’re calling a volumetric near-eye display. With the goal of a truly immersive see-through NED experience in mind, the UNC Chapel Hill team focused on the mismatch between two cross-coupled physiological processes that affect depth perception: vergence (centering a fixated object on each eye’s fovea) and accommodation (bringing a fixated object into focus on the retina). Our eyes automatically adjust their optical focus based on the perceived distance to the object they’re looking at. When the brain receives mismatched cues between the apparent distance of a virtual 3D object and the distance at which the eye must focus, the result can be not only focus problems but also visual fatigue and eyestrain.

The team proposed a DLP-based solution in which a focus-tunable lens sweeps continuously through 280 synchronized binary images in each lens cycle. The team’s current prototype uses a DLi4130 .7″ XGA High-Speed Development Kit – a DLP Discovery 4100-based kit featuring the high-speed visible DLP7000 chipset – along with a RAY-7A Fiber Optics Module.

“We aim to make these mixed reality displays more comfortable, because a user looks at different distances in the real world and expects there to be augmented objects at different distances as well,” says team member Kishore Rathinavel. “For example, a user may hold out his/her hand and see a virtual teapot on it, and a moment later the user might look across the street and see his/her future home under design displayed as a virtual object next to other real houses. Current commercial AR displays aren’t able to display objects across such a large depth-range with good depth resolution – we address this limitation. We have currently demonstrated 15cm to 4m (constrained by our lab space) but could be extended later to 20m or more without compromising resolution, display frame-rate, or color bit-depth.”

The DLP optical system, combined with a high dynamic range light source, illuminates each of the 280 “slices” of the virtual image with its own color and brightness. As a focus-tunable lens sweeps continuously across the series of slices at high speed, our eyes can accommodate freely anywhere in a range of 15 cm to 4 m. Similar multifocal displays have been explored by other developers, but never with a continuous sweep of images – past iterations required the focus-tunable lens to briefly “settle” in each focus state. The UNC Chapel Hill team developed a unique algorithm that renders the images by leveraging this continuous motion, mimicking the way a human eye focuses and presenting a full-color volume.
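To get a feel for the numbers, here is a rough back-of-the-envelope sketch in Python. It converts the 15 cm to 4 m range into diopters (the reciprocal of distance in meters, the unit normally used for focus) and spreads the 280 slices across it. Two things here are assumptions made purely for illustration and do not come from the article: that the slices are spaced evenly in diopters, and the 60 Hz lens cycle rate used to estimate a binary frame rate. The function and constant names are hypothetical as well.

```python
# Illustrative arithmetic only. Even spacing in diopters and the 60 Hz
# lens cycle rate are ASSUMPTIONS for this sketch, not figures from the article.

NEAR_M = 0.15            # nearest demonstrated focus distance (15 cm)
FAR_M = 4.0              # farthest demonstrated focus distance (4 m)
NUM_SLICES = 280         # binary images shown per lens cycle
ASSUMED_LENS_HZ = 60.0   # hypothetical lens cycle rate


def to_diopters(distance_m: float) -> float:
    """Optical power (in diopters) needed to focus at a distance in meters."""
    return 1.0 / distance_m


def slice_distances(near_m: float, far_m: float, n: int) -> list[float]:
    """Focus distance of each slice, assuming even spacing in diopters."""
    near_d = to_diopters(near_m)
    far_d = to_diopters(far_m)
    step = (near_d - far_d) / (n - 1)
    # Walk from the nearest slice (highest power) to the farthest one.
    return [1.0 / (near_d - i * step) for i in range(n)]


distances = slice_distances(NEAR_M, FAR_M, NUM_SLICES)
span_d = to_diopters(NEAR_M) - to_diopters(FAR_M)   # total focus range
step_d = span_d / (NUM_SLICES - 1)                  # gap between slices
binary_fps = NUM_SLICES * ASSUMED_LENS_HZ           # binary frames per second

print(f"Focus range: {span_d:.2f} D")
print(f"Slice spacing: {step_d:.4f} D")
print(f"Binary frames per second at the assumed lens rate: {binary_fps:.0f}")
```

Under these assumptions the range spans roughly 6.4 diopters, so neighboring slices sit only about 0.023 diopters apart. The same arithmetic shows that going from 4 m out to 20 m adds just 0.2 diopters to the range, since distant objects are tightly packed in diopter terms.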

In addition to supporting the current volumetric display implementation, the team’s proposed system can emulate varifocal displays and previous multifocal displays, which could make it a test bed for future perceptual studies on accommodation.

The full paper is titled “An Extended Depth-at-Field Volumetric Near-Eye Augmented Reality Display.”